On the Open Mind blog, carrot eater posted the following comment with an inline response from Tamino:

Are the offsets mu specific by month? I’m curious at what point you take out the seasonal cycle.

[Response: There’s a single mu for a given station. Only after combining stations do I compute anomalies to remove the seasonal cycle. Computing a separate mu for each month is a viable alternative, but forces all station records to have the same average seasonal cycle as the reference station. If one wishes to study the seasonal cycle itself, it’s better to use a single offset for the station as a whole.]

So, is Tamino correct that the difference is only important with regard to the seasonal cycle or is the situation more complicated than that?

In order to see how the two methods may differ, it is instructive to apply both of them to a set of “noiseless” data. For this purpose, we will construct a set of three hypothetical stations with 10 years of data each. We will also calculate the mean monthly temperature (which looks like station 2):

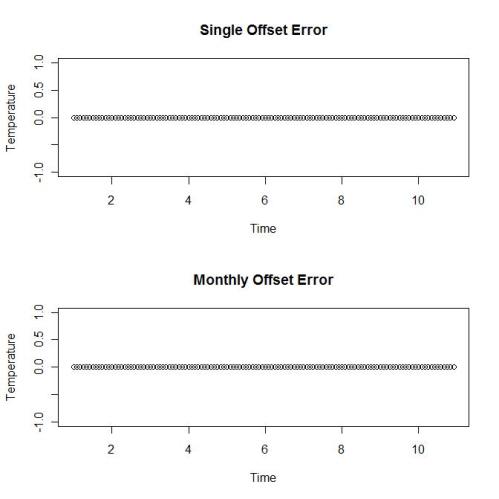

The data are pretty boring since each year is identical to the others, but we will apply our two methods to these three stations and compare the results to the mean temperature sequence. For this purpose, I have written some R functions (including a new one for the monthly offsets, which gives the same results as the anov.combine function from my previous post on this topic). The functions and the script for this analysis are included at the bottom of this post. Our results are as expected: both methods reconstruct the mean temperature series exactly.

When no data are missing, virtually all of the methods give essentially the same result, so it is more informative to see what happens when a single observation is removed. For this purpose, we set the first observation of series three to missing and run both procedures.

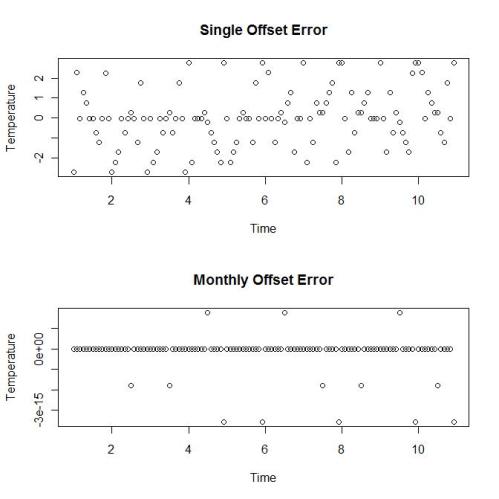

You will notice that the single offset method produces an error whereas the monthly method does not. What happens if more than one observation is missing?

As an experiment, we randomly select one station at each time and remove its value. (One could remove two stations at some times and the results would stay much the same.) When we run the procedures and plot the errors, we get the following:

The single offset errors are all over the place, but the monthly offset errors are zero to 14 decimal places; only rounding errors from the calculations are present.

The problem is that, in the presence of missing values, the single offset method works only when ALL of the stations' monthly profiles are parallel. Otherwise, the method produces errors that depend on both the month and the set of stations present at that time. One might object that this is an artificial example and that real stations, say those in the same grid cell, have parallel profiles.
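The parallel-profile condition can be checked on synthetic data in a few lines. To stay self-contained, the sketch below does not use the post's calc.offset(); instead it fits the single offset model x[t, j] = T[t] + mu[j] by ordinary least squares with lm(), using sum-to-zero contrasts so the offsets sum to zero. When the model fits exactly (parallel profiles), any such fit must recover the complete-data mean, so that case is a fair check. In the non-parallel case lm() weights the time points somewhat differently from calc.offset(), so the individual errors will not match the post's, only their character. The helper name single.offset.lm, the seed and the +5/+10 shifts are illustrative assumptions.

```r
# Sketch (assumption): lm() stands in for the post's calc.offset().
# Hypothetical helper: fit x[t, j] = T[t] + mu[j] with sum(mu) = 0, return T.
single.offset.lm <- function(x) {
  d <- data.frame(val     = c(x),
                  time    = factor(rep(seq_len(nrow(x)), ncol(x))),
                  station = factor(rep(seq_len(ncol(x)), each = nrow(x))))
  fit <- lm(val ~ 0 + time + station, data = d,     # rows with NA are dropped
            contrasts = list(station = "contr.sum"))
  unname(coef(fit)[paste0("time", seq_len(nrow(x)))])
}

s <- rep(1:12, 10)                       # same seasonal shape as t1 in the post
set.seed(1)                              # arbitrary seed
miss.idx <- cbind(1:120, sample(1:3, 120, replace = TRUE))  # one station per time

recon.error <- function(full) {
  x <- full
  x[miss.idx] <- NA                      # remove one value at every time point
  single.offset.lm(x) - rowMeans(full)   # compare with the complete-data mean
}

err.parallel <- recon.error(cbind(s, s + 5, s + 10))  # profiles differ by constants
err.scaled   <- recon.error(cbind(s, 2 * s, 3 * s))   # the post's t1, t2, t3
max(abs(err.parallel))                   # rounding error only
max(abs(err.scaled))                     # clearly nonzero
```

With parallel profiles the missing values do no harm; with the post's scaled profiles, a single offset per station cannot absorb a station difference that changes from month to month.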

To answer that, I took the nine bolded stations from a collection which Tamino used in his Gridiron Games post:

| Station ID | Location | Latitude (°N) | Longitude (°E) |
|---|---|---|---|
| **10160355000** | **SKIKDA** | 36.93 | 6.95 |
| **10160360000** | **ANNABA** | 36.83 | 7.82 |
| **10160400001** | **CAP CARBON** | 36.80 | 5.10 |
| **10160402000** | **BEJAIA** | 36.72 | 5.07 |
| **10160403000** | **GUELMA** | 36.47 | 7.47 |
| **10160419000** | **CONSTANTINE** | 36.28 | 6.62 |
| **10160445000** | **SETIF** | 36.18 | 5.42 |
| **10160468000** | **BATNA** | 35.55 | 6.18 |
| **10160475000** | **TEBESSA** | 35.48 | 8.13 |
| 15260714000 | BIZERTE | 37.25 | 9.80 |
| 15260725000 | JENDOUBA | 36.48 | 8.80 |
| 62316539000 | CAPO FRASCA | 39.75 | 8.47 |
| 62316560000 | CAGLIARI/ELMA | 39.25 | 9.07 |
| 62316564000 | CAPO CARBONAR | 39.10 | 9.52 |

I used these nine because, rather than searching for the exact data he may have used, I simply took the stations in the list that were available in the Met Office release of January 2010, all of which I had already processed to make their use in R a simple matter:

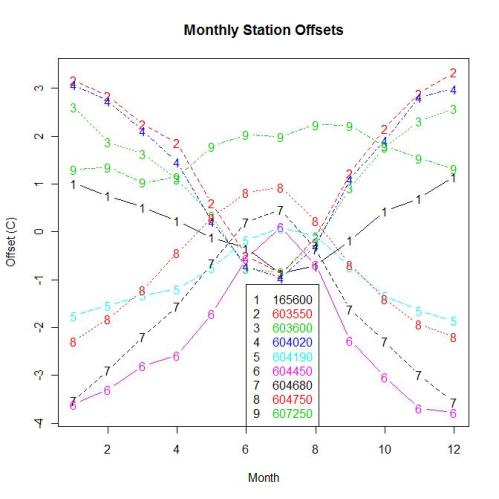

After running the monthly offset program, I plotted the offsets for each station.

It is pretty clear that some of the stations differ considerably from each other with regard to the pattern of differences at various times of the year. Applying a single offset to each station would simply be the wrong thing to do. I also think that Tamino is probably off the mark regarding the value of the single offset in the study of the seasonal cycle, but that is an issue to talk about at another time.

I have included the R script for the post below. The Algeria data is loaded by R during the running of the script.

[Update: After I posted, I also put up a comment on Open Mind which went as follows:

Tamino, I don’t think that your reply addresses the real issue, here.

The entire need for anything more than simple averaging is due to the fact that there are missing values in station records, and the single offset is not capable of dealing with those in most real situations.

You might wish to look at a post which I have put up on this topic:

https://statpad.wordpress.com/2010/02/25/comparing-single-and-monthly-offsets/

Your comment is awaiting moderation.

RomanM // February 25, 2010 at 6:57 pm

About a half-hour after it went up, the comment disappeared….]

##### Functions for calculating offsets

# and combining station series

###function for calculating matrix pseudo inverse for any r x c size

psx.inv = function(mat,tol = NULL) {

# if (is.null(dim(mat))) return( mat /sum(mat^2))

if (NCOL(mat)==1) return( mat /sum(mat^2))

msvd = svd(mat)

dind = msvd$d

if (is.null(tol)) {tol = max(NROW(mat),NCOL(mat))*max(dind)*.Machine$double.eps}

dind[dind<tol]=0

dind[dind>0] = 1/dind[dind>0]

inv = msvd$v %*% diag(dind, length(dind)) %*% t(msvd$u)

inv}

### single offset calculation routine

# also used by monthly routine

calc.offset = function(tdat) {

delt.mat = !is.na(tdat)

delt.vec = rowSums(delt.mat)

co.mat = diag(colSums(delt.vec*delt.mat)) - (t(delt.mat)%*%delt.mat)

co.vec = colSums(delt.vec*delt.mat*tdat,na.rm=T) - colSums(rowSums(delt.mat*tdat,na.rm=T)*delt.mat)

offs = psx.inv(co.mat)%*%co.vec

c(offs)-mean(offs)}

### generally assumes that tsdat starts in month 1 and is a time series.

monthly.offset =function(tsdat) {

dims = dim(tsdat)

off.mat = matrix(NA,12,dims[2])

dat.tsp = tsp(tsdat)

for (i in 1:12) {

dats = window(tsdat,start=c(dat.tsp[1],i), deltat=1)

offsets = calc.offset(dats)

off.mat[i,] = offsets }

colnames(off.mat) = colnames(tsdat)

rownames(off.mat) = month.abb

nr = dims[1]

nc = dims[2]

matoff = matrix(NA,nr,nc)

for (i in 1:nc) matoff[,i] = rep(off.mat[,i],length=nr)

temp = rowMeans(tsdat-matoff,na.rm=T)

pred = c(temp) + matoff

residual = tsdat-pred

list(temps = ts(temp,start=c(dat.tsp[1],1),freq=12),pred =pred, residual = residual, offsets=off.mat) }

###

#Single offset

###

single.offset =function(tsdat) {

offsets = calc.offset(tsdat)

names(offsets) = colnames(tsdat)

temp = colMeans(t(tsdat)-offsets,na.rm=T)

nr = length(temp)

pred = temp + matrix(rep(offsets,each = nr),nrow=nr)

residual = tsdat-pred

list(temps = ts(temp,start=c(tsp(tsdat)[1],1),freq=12),pred =pred, residual = residual, offsets=offsets) }

############ Script for the post

#create three noiseless series

t1 = rep(1:12,10)

t2 = 2*t1

t3 = 3*t1

temps = ts(cbind(t1,t2,t3),start=c(1,1),freq=12)

meantemp = ts(rowMeans(temps),start=c(1,1),freq=12)

par(mfrow=c(2,1))

matplot(time(temps),temps,type="l", main = "Noiseless Temperature Series",xlab="Time",ylab="Temperature")

plot(meantemp,main = "Mean Temperature Series",xlab="Time",ylab="Temperature")

#combine series using single offset

no.miss.1 = single.offset(temps)

#combine series using monthly offset

no.miss.mon = monthly.offset(temps)

plot(no.miss.1$te - meantemp,type="p",main = "Single Offset Error",xlab="Time",ylab="Temperature")

plot(no.miss.mon$te - meantemp,type="p",main = "Monthly Offset Error",xlab="Time",ylab="Temperature")

######introduce a missing value in third sequence at time 1

temps1.miss = temps

temps1.miss[1,3] = NA

#combine series

miss.1 = single.offset(temps1.miss)

miss.1.mon = monthly.offset(temps1.miss)

plot(miss.1$te - meantemp,type="p",main = "Single Offset Error",xlab="Time",ylab="Temperature")

plot(miss.1.mon$te-meantemp,type="p",main = "Monthly Offset Error",xlab="Time",ylab="Temperature")

#introduce more missing values

#randomly select a station to remove at each time

extrat = temps

sequ = sample(c(1,2,3),120,replace=T)

sequ # results will likely differ when repeated

# [1] 1 3 2 3 3 2 2 3 3 2 1 2 1 1 1 2 1 2 1 2 3 1 2 3 2 1 1 2 1 2 1 3 2 1 2 3 3

# [38] 1 2 2 2 3 3 3 3 3 3 1 2 1 1 1 2 3 2 2 3 1 2 1 2 3 2 1 2 3 3 1 1 3 2 2 3 1

# [75] 2 1 3 3 1 1 1 1 3 1 3 2 1 3 1 3 1 1 1 2 2 2 3 2 1 3 1 3 3 3 3 3 1 1 3 3 2

#[112] 3 3 3 1 3 3 1 2 1

extrat[cbind(1:120,sequ)]=NA

#combine series

miss.3 = single.offset(extrat)

miss.3.mon = monthly.offset(extrat)

plot(miss.3$te - meantemp,type="p",main = "Single Offset Error",xlab="Time",ylab="Temperature")

plot(miss.3.mon$te-meantemp,type="p",main = "Monthly Offset Error",xlab="Time",ylab="Temperature")

par(mfrow=c(1,1))

######

#Algeria data offsets

#Data is from met office release in January, 2010

algdat = dget(url("https://statpad.files.wordpress.com/2010/02/algdat.doc"))

#plot station data

plot(algdat)

#calculate monthly offsets and plot

algeria = monthly.offset(algdat)

matplot(algeria$off,type="b",col=c(1:6),lwd=1.8,main ="Monthly Station Offsets", ylab = "Offset (C)",xlab = "Month")

legend(6,-1.1,colnames(algdat), pch=as.character(1:9),text.col=c(1:6))

Nice work. I ran a global gridded version last night. Some series cause the algorithm to crash. I haven’t figured out what the problem is though and wrote simple workarounds to see the result.

Your monthly station offset is a lot like the ones I calculated. Months 6-8 are more similar to each other, with the ends varying. It might be fun to compare their locations to geographic features.

Re: Jeff Id,

Thanks for the compliment.

I updated the bottom of the post. After I wrote and posted it, I put a comment on Open Mind telling Tamino it was here. Gone in 30 minutes…

Sigh…

With regard to the algorithm crashing, try running the function monthly.offset in the script above instead of anov.combine.

I just tested it on two non-overlapping series and it did fine. You also need the two functions psx.inv and calc.offset, both of which are given above as well.

Tell me if you have any more trouble with it.

[Update: The monthly.offset function seems to hiccup when the input is a single time series. It would not be difficult to fix since the output “combined” sequence is the input itself. All that would have to be added is the predicted values (same as the input), offsets (all zero) and residuals (also all zero).]
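A minimal sketch of the fix described in the update, assuming a monthly series that starts in January. The helper name one.series.result is hypothetical and is not part of the script above; it returns the same list components as monthly.offset(), with the input serving as both the combined series and the prediction, zero residuals wherever a value is observed, and a 12 x 1 matrix of zero offsets.

```r
# Hypothetical helper (not part of the post's script): the trivial result for
# a single input series, mirroring the list returned by monthly.offset().
one.series.result <- function(tsdat) {
  tsdat <- as.ts(tsdat)
  off.mat <- matrix(0, 12, 1, dimnames = list(month.abb, colnames(tsdat)))
  list(temps = ts(c(tsdat), start = start(tsdat), frequency = frequency(tsdat)),
       pred = tsdat,               # predicted values equal the input
       residual = tsdat - tsdat,   # zero where observed, NA where missing
       offsets = off.mat)          # every monthly offset is zero
}

x <- ts(c(5.1, NA, 7.3, 8.0), start = c(2000, 1), frequency = 12)
res <- one.series.result(x)
res$offsets[, 1]                   # twelve zeros labelled Jan..Dec
```

A guard such as `if (NCOL(tsdat) == 1) return(one.series.result(tsdat))` at the top of monthly.offset() would then route a single series around the offset calculation.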

Well,

If Tamino ever publishes it will be interesting to see if he ever changes his approach and credits you or not.

I don’t care about the credit. I’ve had (and continue to have) a lot of fun analyzing the methodology – it’s the kind of thing I have done for over forty years.

Frankly, I don’t think his approach is very good as is, not only from the aspects that I have discussed here, but also from the viewpoint of error bars. Because his method leaves seasonal effects in the residuals, he would likely overestimate the variability of the station data.

I had genuinely thought that he could put aside his dismissive arrogance and discuss the mathematics, but I guess that might go against the “skeptics-is-ignorant” meme so often seen on Open Mind and be seen as a weakness on his part. Silly me.

Pingback: Roman, Seasonal Anomaly Offset « the Air Vent

Dear Sir;

I would really like to understand the point here.

I see that stations 1,2,3,4 have one seasonal polarity of monthly offsets, 5,6,7,8 have the opposite polarity pattern of monthly offsets, and 9 is ~.

And, BTW, the offsets are not small fractions of a degree.

Is this a result of the pattern of missing months? I assume not.

I assume that a different group of stations would have different values for the monthly offsets.

What would this look like with, say, 1,2,3,4 omitted, etc. ? I assume 5,6,7,8 become ‘butterflied’ around 0 offset. Is it useful to figure out where these things are? I can maybe do that…


Are ‘we’ surprised that months 6-8 have collectively ‘smaller’ offsets than the others? Is there an obvious reason that I am overlooking? (Is it ‘shared’ air masses?)

But if I’m getting it, this is a way to ‘combine’ stations within an area, without that distasteful ‘homogenization’ process where all history is rewritten, and the possibility for insight lost therewith. Yes?

And those elusive ‘covariance measures’ are more readily explored without the twin masks of anomalization and homogenization. Yes?

OK, it uses a bit more computer memory to keep the monthly offsets for each station. But ‘we’ can keep the raw data without revising history.

I greatly appreciate your open endeavor, in contrast with those wisenheimers who know it all but won’t discuss it with lower lifeforms who seem to be such a threat.

RR

Re: Ruhroh,

What is important to recognize here is that it is the difference between two station offsets that tells us about the relationship between the stations, and that this difference varies throughout the year.

In my previous post, I gave an example of one station being located at the edge of a large body of water and the other inland. The inland station might very well have hotter summers and colder winters than the shore station. This would be reflected in different patterns for the two stations.

My suggestion is to plot the coordinates of the nine stations on a map to see if there is a geographical reason for the similarities and/or differences in the patterns of the offsets.

As far as “homogenization” goes, beyond correcting obvious errors, it should become part of the combining process rather than picking arbitrary stations to use for spurious “adjustments”.

Covariance occurs at two levels: one is the large variation caused by seasonal changes; the other is the subtler, smaller changes due to regional climate effects. Calculating these relationships can be a tough problem.

Hi,

Tamino has finished calculating the area weighted average time series of annual averages. How much do your methods for combining stations differ from his in the final result ?

Re: Halldór Björnsson,

I have no idea how they might differ. I have spent much of my recent effort on working out theoretical and practical issues in this methodology and not much time applying this to the various data sets. Jeff Id on the Air Vent has been doing some, but he hasn’t finished.

I didn’t know that Tamino had finished anything yet. Since he hasn’t been particularly open about how he does things, it is hard to say whether he has done things correctly.

I know from experience that the seasonal cycle can vary from station to station, and you are clearly correct that one cannot assume a priori that station differences are fixed.

Land vs. Maritime effects are one obvious cause, and these can be surprising. Even among nearby coastal stations, differences may arise. I once noticed this in two stations that are ~30 km apart, one located on the edge of a peninsula and the other at the bottom of a bay. Similar distance to coast but different distance to “open ocean”.

However, it is not obvious that a seasonal cycle in the station differences will strongly affect the results when you calculate the annual average temperature change.

I often find that different methods will lead to very similar end results when analyzing data. And this is of course preferable.

(As a rule of thumb, I distrust results where the signal can only be seen using one method and not others; a strong signal in geophysics should be robust.)

However, if we are talking about monthly values then clearly fixed station differences could become “an issue”.

What needs to be stressed here is that the problems arise because of missing values (over months and/or years) in the station temperature series.

If no values are missing, then you are right that a seasonal cycle in the station differences will not typically affect the results when calculating the monthly mean. However, when one or more are missing at that time, then the way that a particular method accommodates this comes into play.

It is fairly obvious that the accuracy of simple averaging of the available values will be strongly affected by the month in question when the stations vary differently over time. Anomaly methods handle this by looking at station values relative to the behaviour of that same station during a common period for all stations. This can only work if all stations are available during a common time period, which is often not the case.

One can think of this method as comparing all pairs of stations and then determining what the “best” anomalising basis might be for each from all of these comparisons. However, when the stations are combined, the end result is not an anomaly but an estimate of the “mean temperature”. My demonstration above with the artificial data set indicates that the method will lead to statistically unbiased results for the monthly model in my previous post.
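The pairwise reading can be made explicit. In the notation below (introduced here, not in the post), $x_{tj}$ is the value for station $j$ at time $t$, $\delta_{tj}$ is 1 when that value is present and 0 otherwise, $n_t=\sum_k \delta_{tk}$ is the number of stations reporting at time $t$, and $\mu_j$ is the offset for station $j$. Working through calc.offset(), the system it solves, co.mat %*% offs = co.vec, appears to be the set of normal equations for the sum of squared differences between every pair of stations reporting at the same time:

$$
Q(\mu) = \sum_t \sum_{j<k} \delta_{tj}\,\delta_{tk}\,\big[(x_{tj}-\mu_j) - (x_{tk}-\mu_k)\big]^2
$$

$$
\frac{\partial Q}{\partial \mu_j} = 0
\;\Longleftrightarrow\;
\mu_j \sum_t \delta_{tj}(n_t-1) \;-\; \sum_{k\neq j} \mu_k \sum_t \delta_{tj}\,\delta_{tk}
= \sum_t n_t\,\delta_{tj}\,x_{tj} \;-\; \sum_t \delta_{tj} \sum_k \delta_{tk}\,x_{tk}
$$

Term by term, the diagonal of co.mat is $\sum_t \delta_{tj}(n_t-1)$, its off-diagonal entries are $-\sum_t \delta_{tj}\delta_{tk}$, and co.vec is the right-hand side. Every column of co.mat sums to zero, so only differences between offsets are determined; calc.offset() handles this with the pseudo-inverse followed by the centring $\sum_j \mu_j = 0$, and monthly.offset() repeats the calculation separately for each calendar month.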

Roman, I think I understand why your method works (better) after reading your examples above. What happens if a whole year is missing (or ignored) in one of the stations?

By the way, when I click onto your site here, Roman, and that water scene with the swimming geese (Canadian no less) comes up, I immediately feel more relaxed. Does it have that effect on others?

Kenneth, if a whole year is missing, it is no different than if one month is missing. Each missing month is offset separately from the others and is not affected by the months around it. The downside is that all missing values will serve to increase the error bar for the estimated combined value at that time.

Yeah, on me. I like that picture. Did you count the total number of goslings for the three nesting pairs?

I get to look at that scene quite often, since I took the photo from my back deck about a year and a half ago when they lazily swam by on a nice summer afternoon. 🙂

Tamino has already said that he ran the numbers and that you have a better approach. He also said that the difference does not affect the conclusion.

sorry. Tammy has no credibility on this issue.

when he posts up turnkey code, i’ll look.

“He also said that the difference does not affect the conclusion”: that is what I call The Great Climate Science Invariance. Differences never affect conclusions. Presumably that’s because the conclusions are God-given.

Pingback: Global Gridded GHCN Trend by Seasonal Offset Anomaly Matching « the Air Vent

Roman when you say:

“It is fairly obvious that the accuracy of simple averaging of the available values will be strongly affected by the month in question when the stations vary differently over time. ”

That was my takeaway from the examples you gave. My question above was whether there would be a difference between your method and Tamino’s if a whole year’s worth of data were missing from one of the stations used in combining data.

OT: I live 35 miles from a large body of water (Lake Michigan) but whenever I am in near proximity of a larger body of water and without the hustle and bustle of a big city interfering with the experience, like a lot of the Chicago lake front, I seem to immediately relax. It could be a conditioned response from past (enjoyable) experiences, but anyway it works for me.

My answer was the answer to that question. As an example, I ran the procedures for the artificial data in the head post, this time removing all of the values for station three in year three, but leaving everything else the same. The resulting graph of the error in reconstructing the mean looks like:

The errors in the single offset decrease as the omitted values in station three increase.

I forgot to ask in my post above: if monthly is better than yearly, is weekly better than monthly, and daily better still?

Even at an advanced age a SA will continue to be a SA.

Also do you, Roman, set the default font size for writing posts? Mine comes out quite large and quite welcomed by a pair of old eyes. My experiment of a second ago shows that I could write an answer – and read it – without using my eye glasses.

The smaller you make time periods, the more you increase the number of parameters involved. For daily, the number of offsets is 365n where n is the number of stations. That would definitely be overkill. There is probably a case of diminishing returns involved, but I haven’t looked at what an optimum time interval might look like in detail.

The font is a result of the default settings of either WordPress or your computer. I didn’t do anything to alter that.

This should sharpen the resolution of the instrumental record as a metric and analytical tool of climate science. Roman, I would urge you to consider publication.

IMHO, proper publication would require a comparison of the statistical properties of the procedure with other previously published methods as well as application to some of the temperature data sets. At the moment, that would require lots of effort on reading through and digesting the literature. It was something I was never fond of when doing research before my retirement since all the fun was in the chase – figuring out the math problems and fine tuning the results. After that, it was too much like work.

I would consider it if we could talk somebody like Craig or Hu into co-operating on it. On the other hand, maybe Tamino needs a co-author… 😉

Sorry for the OT Roman. I have been engaged in discussion with your old buddy Tom P here:

http://shewonk.wordpress.com/2010/02/24/enron-and-the-zombie-fungus/#comments

I have cited your CA post “RCS – One Size Fits All” in my discussions with him. Take a peek if you have time.

That Tom P is a hard guy to talk to. He really does have an obsession with Steve M and makes the same request over and over. He apparently does the same with how he interprets his own analysis, without responding to the (critical) points being made.

I asked him if he could provide me with the methods used by dendroclimatologists to determine the uncertainty of tree ring width measurements based on the replicate samples that they often take from the same tree. I have found the variability of these replicate TRWs to be large compared to what their studies are attempting to resolve. I have not been able to find any published accounts of how this variability is handled in reporting dendro results.

I have made this same request to a visiting dendrochronologist at CA and he left without answering. I need to do more analyses myself and more searching for an explanation.

Good luck in trying to get an accounting of uncertainties due to TRW sample deltas from Tom 😉 .

I give up too. He simply won’t listen to any sort of argument, and makes off-the-cuff statements as if they were fact.

I also have posted at Tamino’s (about three times). Each time I was rejected.

It seems that you have to be on the bandwagon to talk with Tamino.

Climategate prompted me to look at the various sites pro and con the AGW hypothesis.

There is agreement that CO2 causes a warming effect based on the absorption curves. Call it 1 degree C. After that the arguments begin.

I have been unable to find any definitive evidence or proof linking CO2 to anything other than plant growth response as a function of concentration. Everything else seems to be supposition, assertion or hypotheses.

What am I missing? I lack the math skills to follow too technical an explanation, but do OK on an If-Then-Else logic exposition. Does anyone know where to find one?

Also, I seem to be able to ask this type of question on sites that question one or more aspects of AGW, but get banned or flamed on sites that do not. Is this common?

You can certainly ask such a question on this blog, but you won’t get an answer from me since that is not my area. Here we specialize in the statistical analysis of climate data and leave the CO2 theory to others.

Try the Air Vent or WUWT for sites with freedom to explore. However, I should say that I doubt you will find a “convincing proof” that CO2 will take us to oblivion. I haven’t seen one yet.

OK

Terry

Is this what you are looking for?

http://www.realclimate.org/index.php/archives/2007/08/the-co2-problem-in-6-easy-steps/

And if you want a more detailed explanation,

see the “Science of Doom” eight-part series on CO2 (last part not yet posted):

[This and your previous comment are completely OT and distract from the matter at hand. I will leave them up (at least for the time being) but I do not wish for them to be discussed here. Please go to the linked blogs if you wish to continue the topic.

Any further comments in this direction in this thread will be deleted].

I never pass up an opportunity to ask about the use of annual data versus monthly for temperature series. I tend to use yearly data when the series is relatively long. I have no good reasons that are grounded mathematically or statistically for my choice, nor would one expect one from a layperson with my background.

I have, however, noted that while monthly data can provide more degrees of freedom in, for example, estimating trends, the higher autocorrelation of monthly data, when adjustments are made, can effectively reduce these degrees of freedom to those for annual data. I have always been told that the best method of handling autocorrelation of regression residuals is to avoid a model that produces autocorrelation. That advice, I assume, stems from the uncertainty that an autocorrelation adjustment to the degrees of freedom can produce.

I normally use annual data that has been adjusted to anomalies by determining monthly anomalies over the period of the time series and then weighting the months for having varying numbers of days and calculating an average annual anomaly from the result. All these calculations provide a good average, I think, as long as some months are not missing. I tend to attempt to use data with no missing months but that is not always possible with some data sets.

If only a few months worth of anomaly data are missing in a long temperature series, I think the error that arises from this situation is partially mitigated by the fact that temperature anomaly changes occur more on an annual basis than a monthly one. Also the monthly temperatures used to calculate the anomaly should not be influenced much by a few missing months worth of data.
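The annual-anomaly recipe described above can be sketched as follows. The details are assumptions rather than the commenter's actual code: the monthly series starts in January and covers whole years, a fixed 365-day calendar supplies the day weights, and a missing month is simply dropped from that year's weights. In the toy example each year is the same seasonal cycle shifted by 0, 1 and 2 degrees, so the annual anomalies come out as -1, 0 and 1.

```r
# Sketch of day-weighted annual anomalies from monthly data (assumptions:
# series starts in January, whole years, fixed 365-day calendar).
annual.anomaly <- function(x) {
  m <- matrix(c(x), nrow = 12)                 # rows = months, columns = years
  clim <- rowMeans(m, na.rm = TRUE)            # each month's mean over the record
  anom <- m - clim                             # monthly anomalies (recycled by row)
  days <- c(31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31)
  ok <- !is.na(anom)
  w <- days * ok                               # zero weight for missing months
  colSums(anom * days, na.rm = TRUE) / colSums(w)
}

season <- 10 * sin(2 * pi * (1:12) / 12)
x <- ts(rep(season, 3) + rep(0:2, each = 12), start = c(2000, 1), frequency = 12)
annual.anomaly(x)                              # approximately -1, 0, 1
```

Setting one month to NA (say, May of the first year) leaves that year's anomaly at -1 but shifts the May climatology, so the other two years move slightly away from 0 and 1.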

Having said that, I am aware that globally January 2010 was the hottest on record after a not so spectacularly warm 2009. I suppose someone like me, without substantial theoretical grounding in these matters, should look to some numerical analysis of simulated temperature series.

Good Stuff Roman. I have been mucking around with trying to make sense out of combining stations for months.

The one that has me pulling my hair out is Halls Creek in Western Australia which is one used by Jones in his original paper.

In the 1940s the town moved 12 km west and 63 metres uphill.

Plotting the two stations, the BOM homogenisation (this station is part of the new Australian “Quality” reference network) and trends gives this:

At first glance it appears to be instrument error but analysis by month gives this

Remember that July is the middle of winter in the SH; the effect is most severe in winter but measurable in autumn & spring.

Checking the topography the old site is in a dip and surrounded by higher terrain.

I was certainly surprised by the big difference in sites so close but in a location where it is assumed that the country is much the same.

It appears that in winter the cold air is trapped in the basin, giving substantially colder minimums.

I think it is time for me to put away the ever increasing spreadsheet and become proficient in R

“I had genuinely thought that he could put aside his dismissive arrogance and discuss the mathematics, but I guess that might go against the “skeptics-is-ignorant” meme so often seen on Open Mind and be seen as a weakness on his part. Silly me.”

No, it’s the ‘skeptics are abusive bastards’ that is the problem. You are indeed silly if you can’t see that. The abuse and personalisation of attacks on individuals is anti-science.

[Wow! I never realized that! We can learn something new every day – even from someone who needs to use a fake email address.]

Pingback: GHCN Global Temperatures – Compiled Using Offset Anomalies « the Air Vent

Pingback: Better Late Than Never | Trees for the Forest

Pingback: D’Aleo at Heartland: An Apple a Day « The Whiteboard

Robert, in reply to your comment in another thread:

I presume that what you are referring to as Tamino’s method is what was discussed in my original post on the topic in this thread. If so, my version would basically not be different as far as the end results go. Applying it to a set of annual series is basically the same math as calculating for a single month when dealing with monthly series.

Initially, Tamino suggested that a single offset would work on monthly data and the intent of this post was to show that this was not a good idea because the differences between stations may vary widely from month to month due to systematic factors.

One of the main advantages for using the monthly offsets is to deal with the very common problem of scattered missing monthly values. Otherwise, the data for the entire year would need to be excluded, or at best, it would have to be infilled. Such infilling can introduce artefacts which produce further distortions in the data.

The portion of the R program that should work well for calculating offsets at annual resolution is this:

tdat is the matrix whose columns consist of the various annual series.

###function for calculating matrix pseudo inverse for any r x c size

005

006 psx.inv = function(mat,tol = NULL) {

007 # if (is.null(dim(mat))) return( mat /sum(mat^2))

008 if (NCOL(mat)==1) return( mat /sum(mat^2))

009 msvd = svd(mat)

010 dind = msvd$d

011 if (is.null(tol)) {tol = max(NROW(mat),NCOL(mat))*max(dind)*.Machine$double.eps}

012 dind[dind<=tol]=0

013 dind[dind>0] = 1/dind[dind>tol]

014 inv = msvd$v %*% diag(dind, length(dind)) %*% t(msvd$u)

015 inv}

016

017 ### single offset calculation routine

018 # also used by monthly routine

019

020 calc.offset = function(tdat) {

021 delt.mat = !is.na(tdat)

022 delt.vec = rowSums(delt.mat)

023 co.mat = diag(colSums(delt.vec*delt.mat)) - (t(delt.mat)%*%delt.mat)

024 co.vec = colSums(delt.vec*delt.mat*tdat,na.rm=T) - colSums(rowSums(delt.mat*tdat,na.rm=T)*delt.mat)

025 offs = psx.inv(co.mat)%*%co.vec

026 c(offs)-mean(offs)}

[Note that I have made a slight change in line 012 from the original script in the head post]

Hey Roman M,

I was wondering was there a specific format the data would have to be in (particularly the columns and headers?) in order to use the method you outlined above for annual temperatures. I can’t seem to get the script working and I can’t really figure out why. The tdat function, is that associated with a particular R package that maybe I did not download?

Sorry about that. It appears that copying the script out of the head post introduced some format problems. Try this:

###function for calculating matrix pseudo inverse for any r x c size

psx.inv = function(mat,tol = NULL) {

# if (is.null(dim(mat))) return( mat /sum(mat^2))

if (NCOL(mat)==1) return( mat /sum(mat^2))

msvd = svd(mat)

dind = msvd$d

if (is.null(tol)) {tol = max(NROW(mat),NCOL(mat))*max(dind)*.Machine$double.eps}

dind[dind<=tol] = 0

dind[dind>tol] = 1/dind[dind>tol]

inv = msvd$v %*% diag(dind, length(dind)) %*% t(msvd$u)

inv}

### single offset calculation routine

# also used by monthly routine

calc.offset = function(tdat) {

delt.mat = !is.na(tdat)

delt.vec = rowSums(delt.mat)

co.mat = diag(colSums(delt.vec*delt.mat))-(t(delt.mat)%*%delt.mat)

co.vec = colSums(delt.vec*delt.mat*tdat,na.rm=T)-colSums(rowSums(delt.mat*tdat,na.rm=T)*delt.mat)

offs = psx.inv(co.mat)%*%co.vec

c(offs)-mean(offs)}

The columns of the matrix tdat are the annual temperature series.

Hey Roman,

from what I can see the SVD in R does not like missing values. I know you wouldn’t use infilling so how do you deal with missing values in the annual temperature grid for example?

Robert

I don’t understand how the first part of your statement relates to the second.

I assume that you are referring to the script which I posted above yesterday. The tdat temperature matrix is allowed to contain missing values. However, the svd function in psx.inv is not applied to tdat, but to another matrix which does not contain any missing values so there is no math problem here.
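A small illustration of the point above, using the two lines of calc.offset() that build co.mat, applied to a made-up three-station matrix with gaps (the data values are invented for the example). The missing values enter only through the logical matrix delt.mat, so co.mat itself is NA-free; its rows also sum to zero, which makes it singular and is why a pseudo-inverse (psx.inv) is used rather than an ordinary inverse.

```r
# Made-up annual data: each line below is one station (column), with gaps.
tdat <- matrix(c(10.1, 11.3,   NA, 12.0,
                  9.8,   NA, 11.1, 11.9,
                  8.7,  9.9, 10.5,   NA), ncol = 3)
delt.mat <- !is.na(tdat)                 # TRUE where a value is observed
delt.vec <- rowSums(delt.mat)            # number of stations present at each time
co.mat <- diag(colSums(delt.vec * delt.mat)) - (t(delt.mat) %*% delt.mat)

anyNA(tdat)                              # TRUE: the data have gaps
anyNA(co.mat)                            # FALSE: co.mat depends only on the NA pattern
rowSums(co.mat)                          # all zero, so co.mat is singular
svd(co.mat)$d                            # smallest singular value is essentially 0
```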

Pingback: The Blackboard » Another land temp reconstruction joins the fray

Pingback: Proxy Hammer « the Air Vent